Overview

Motion Planning Augmented Reinforcement Learning for Long-Horizon Visual Robot Manipulation was a personal project I carried out at the Helper Lab, Sungkyunkwan University, under the supervision of Professor Mun-Taek Choi.

This research project is based on my Master’s thesis in Intelligent Robotics.

Goal

- The objective of this study is to improve the learning efficiency of Reinforcement Learning (RL) by incorporating physics-informed guidance from motion planning, targeting a mobile manipulator in a simulated household environment.

Description

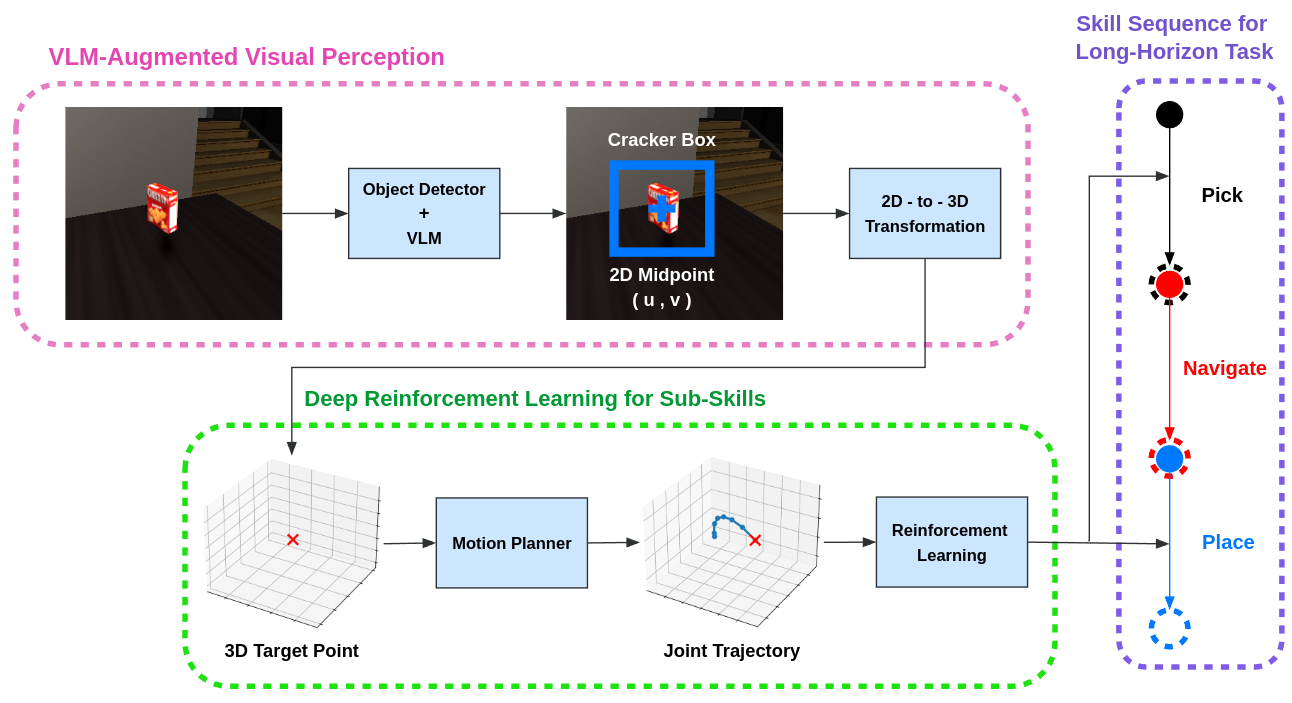

The methodology builds upon the framework proposed by Gu et al. in “Multi-Skill Mobile Manipulation for Object Rearrangement”.

To address sparse rewards and inefficient exploration in RL, motion planning is incorporated into the reward function as guidance.
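The sketch below shows why a planner-aware distance can be a better guidance signal than straight-line distance. It is a minimal illustration only. A 2D occupancy grid and A* stand in for the project's actual motion planner, which is not specified here. `planned_path_length` and `progress_reward` are hypothetical helpers, and the reward is a simple "distance decreased" progress term, not the exact reward used in the thesis.

```python
import heapq
import math
from typing import List, Optional, Tuple

Cell = Tuple[int, int]  # (row, col) on an occupancy grid


def planned_path_length(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[float]:
    """A* on a 4-connected grid (1 = obstacle). Returns the shortest path length in cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell: Cell) -> int:
        # Manhattan distance: admissible for 4-connected unit-cost moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]
    best_cost = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            return float(cost)
        if cost > best_cost[cell]:
            continue  # stale queue entry
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, math.inf):
                    best_cost[nxt] = new_cost
                    heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None  # goal unreachable


def progress_reward(prev_dist: float, curr_dist: float, scale: float = 1.0) -> float:
    """Dense reward for reducing the distance-to-goal between two consecutive steps."""
    return scale * (prev_dist - curr_dist)


# Toy map: a wall separates start and goal, with a gap only in the left column.
grid = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
]
goal = (2, 4)
prev, curr = (0, 4), (0, 3)  # the robot steps left, toward the gap

euclid_r = progress_reward(math.dist(prev, goal), math.dist(curr, goal))
planner_r = progress_reward(planned_path_length(grid, prev, goal), planned_path_length(grid, curr, goal))
print(round(euclid_r, 3), planner_r)  # -0.236 1.0
```

The Euclidean term penalizes the step toward the gap, while the planner-based term rewards it. This is one way a planner-aware distance can make exploration less misleading than a straight-line distance.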

The task is decomposed into pick, place, and navigation sub-skills, which are executed sequentially through point-based skill chaining.
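Sequential skill chaining can be sketched as follows. This assumes each sub-skill exposes a single step function and a success check. `Skill`, `run_chain`, and the toy 1-D world are hypothetical, and the project's point-based hand-off between skills is not reproduced. Here each skill simply starts from the state where the previous one ended.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

State = Dict[str, Any]


@dataclass
class Skill:
    name: str
    act: Callable[[State], State]     # stands in for one step of a learned sub-skill policy
    is_done: Callable[[State], bool]  # success / termination check for this sub-skill
    max_steps: int = 50


def run_chain(skills: List[Skill], state: State) -> List[str]:
    """Run sub-skills in order; each starts from the state where the previous one ended."""
    completed: List[str] = []
    for skill in skills:
        for _ in range(skill.max_steps):
            if skill.is_done(state):
                break
            state = skill.act(state)
        if not skill.is_done(state):
            break  # this sub-skill failed, so the remaining ones never run
        completed.append(skill.name)
    return completed


def move_toward(target: int) -> Callable[[State], State]:
    # Toy 1-D navigation: move one unit toward `target` per step
    def act(s: State) -> State:
        return {**s, "pos": s["pos"] + (1 if s["pos"] < target else -1)}
    return act


def make_chain(nav_budget: int) -> List[Skill]:
    return [
        Skill("navigate", move_toward(3), lambda s: s["pos"] == 3),
        Skill("pick", lambda s: {**s, "holding": True}, lambda s: s["holding"]),
        Skill("navigate", move_toward(7), lambda s: s["pos"] == 7, max_steps=nav_budget),
        Skill("place", lambda s: {**s, "holding": False, "placed": True}, lambda s: s["placed"]),
    ]


start = {"pos": 0, "holding": False, "placed": False}
print(run_chain(make_chain(nav_budget=50), start))  # ['navigate', 'pick', 'navigate', 'place']
print(run_chain(make_chain(nav_budget=2), start))   # ['navigate', 'pick']
```

The second run shows how a long-horizon chain breaks: once one sub-skill fails within its step budget, none of the later skills are attempted.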

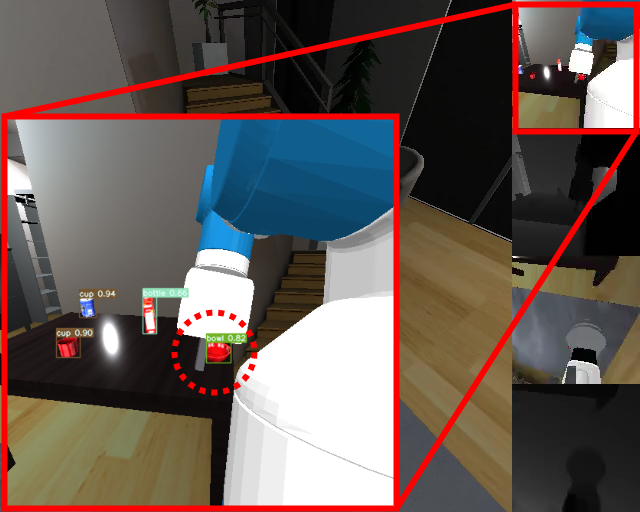

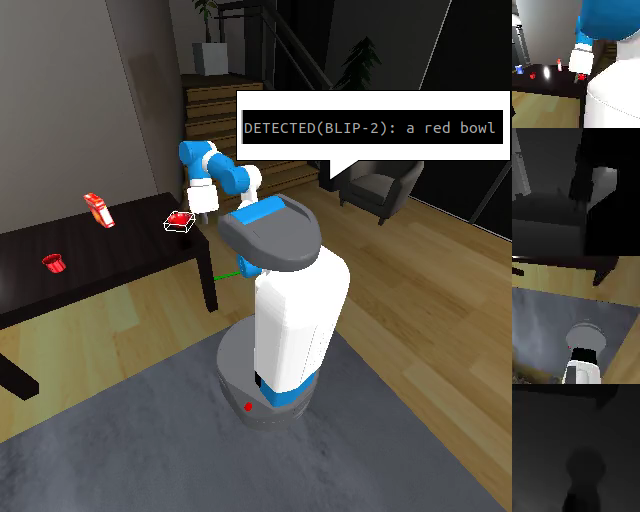

Object detection is augmented with a Vision-Language Model (VLM): YOLOv7 is combined with BLIP-2, enabling open-vocabulary semantic recognition.
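One plausible way to fuse the two models is sketched below, under stated assumptions. The detector proposes boxes with closed-set labels, and a VLM scores each crop against a free-text query. The exact fusion rule used in the project is not described, so multiplying detector confidence by a VLM match score is an assumption. `vlm_score` is a hypothetical hook that would wrap BLIP-2 (for instance, image-text matching on the cropped region). No real model is called here.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels


@dataclass
class Detection:
    box: Box
    label: str         # closed-set class name from the detector (e.g. YOLOv7)
    confidence: float  # detector confidence in [0, 1]


def open_vocab_select(
    detections: List[Detection],
    query: str,
    vlm_score: Callable[[Box, str], float],
    min_score: float = 0.5,
) -> Optional[Detection]:
    """Pick the detection whose image crop best matches a free-form text query.

    `vlm_score(box, query)` is a hypothetical hook that would crop `box` from the
    RGB frame and return a VLM image-text match score in [0, 1].
    """
    scored = [(det.confidence * vlm_score(det.box, query), det) for det in detections]
    accepted = [(s, d) for s, d in scored if s >= min_score]
    if not accepted:
        return None
    return max(accepted, key=lambda pair: pair[0])[1]


# Stubbed detector output and VLM scores, purely for illustration.
dets = [
    Detection((10, 10, 60, 60), "bowl", 0.9),
    Detection((100, 20, 150, 70), "bowl", 0.95),
]
fake_match = {(10, 10, 60, 60): 0.9, (100, 20, 150, 70): 0.2}


def stub_vlm(box: Box, query: str) -> float:
    return fake_match[box] if "red" in query else 0.1


print(open_vocab_select(dets, "red ceramic bowl", stub_vlm).box)  # (10, 10, 60, 60)
print(open_vocab_select(dets, "blue mug", stub_vlm))              # None
```

Both boxes carry the same closed-set label "bowl". Only the VLM score lets the query "red ceramic bowl" single out one of them, which is the benefit open-vocabulary recognition adds on top of a fixed-class detector.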

The system is developed using the Habitat Simulator.

The robot setup is based on the Fetch robot, which consists of a differential-drive mobile base and a 7-DOF arm equipped with a parallel-jaw gripper. RGB-D cameras are mounted on both the head and the arm.

Note that abstract grasping is used: physical contact dynamics are not modeled, and a grasp is considered successful when the gripper is within a specified distance threshold of the target object.
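Abstract grasping reduces to a distance check, as in the minimal sketch below. `AbstractGripper` is hypothetical, and the 0.15 m threshold is an illustrative value rather than the setting used in this project. Once attached, the object would simply be moved rigidly with the gripper, with no contact forces simulated.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class AbstractGripper:
    grasp_threshold: float = 0.15  # metres; illustrative value, not the project's setting
    held_object: Optional[str] = None

    def try_grasp(self, ee_pos: Vec3, obj_name: str, obj_pos: Vec3) -> bool:
        """Attach the object if the end-effector is close enough; no contact forces are simulated."""
        if self.held_object is None and math.dist(ee_pos, obj_pos) <= self.grasp_threshold:
            self.held_object = obj_name  # the object would then be rigidly moved with the gripper
        return self.held_object == obj_name

    def release(self) -> Optional[str]:
        """Drop whatever is held and return its name (None if the gripper was empty)."""
        obj, self.held_object = self.held_object, None
        return obj


gripper = AbstractGripper()
print(gripper.try_grasp((0.0, 0.0, 0.5), "cup", (0.0, 0.0, 0.0)))  # False: 0.5 m away
print(gripper.try_grasp((0.0, 0.0, 0.1), "cup", (0.0, 0.0, 0.0)))  # True: within 0.15 m
print(gripper.release())                                           # cup
```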

As a result, the success rate of each sub-skill converged faster than with the baseline distance-based methods, which lack motion-planning augmentation.

Furthermore, the cumulative success rate on the long-horizon tasks reached 0.72.
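The section does not define "cumulative success rate". A common reading for chained skills is that the k-th value is the fraction of episodes that succeeded at every stage up to k. Under that reading the last value is the end-to-end long-horizon success rate. The sketch assumes this definition. `cumulative_success` is a hypothetical helper, and the episode data are invented only to exercise the function. They are not results from this project.

```python
from typing import List, Sequence


def cumulative_success(stages_completed: Sequence[int], num_stages: int) -> List[float]:
    """Entry k-1 is the fraction of episodes that completed sub-skills 1..k in a row.

    stages_completed[i] is how many consecutive sub-skills episode i finished
    before its first failure (num_stages means the whole task succeeded).
    """
    n = len(stages_completed)
    return [sum(c >= k for c in stages_completed) / n for k in range(1, num_stages + 1)]


# Made-up outcomes for five episodes of a four-stage task, only to exercise the function.
print(cumulative_success([4, 4, 2, 3, 0], num_stages=4))  # [0.8, 0.8, 0.6, 0.4]
```

By construction the sequence can only stay flat or decrease along the chain. This is why a long-horizon figure is bounded above by the weakest early stage.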